Jaehoon Choi

I am a Computer Science Ph.D. candidate at the University of Maryland, College Park (UMD), working with Prof. Dinesh Manocha at the GAMMA Lab. I received my M.S. from KAIST, where I was advised by Changick Kim, and my bachelor's degree from KAIST as well.

I’m a researcher working on 3D vision and neural rendering. In Summer 2025, I joined Qualcomm AI Research as a Research Intern, focusing on 3D Gaussian Splatting. Before that, I interned at Meta Reality Labs (2024) on shape-from-polarization algorithms, and at Meta (2023) on neural rendering for VR headsets. From 2020 to 2022, I worked at NAVER LABS as a Research Intern on robot vision and AR platform research.

Feel free to reach out if you're interested in research collaboration!

📬 kevchoi[AT]umd.edu | jaehoonc44[AT]gmail.com

Research

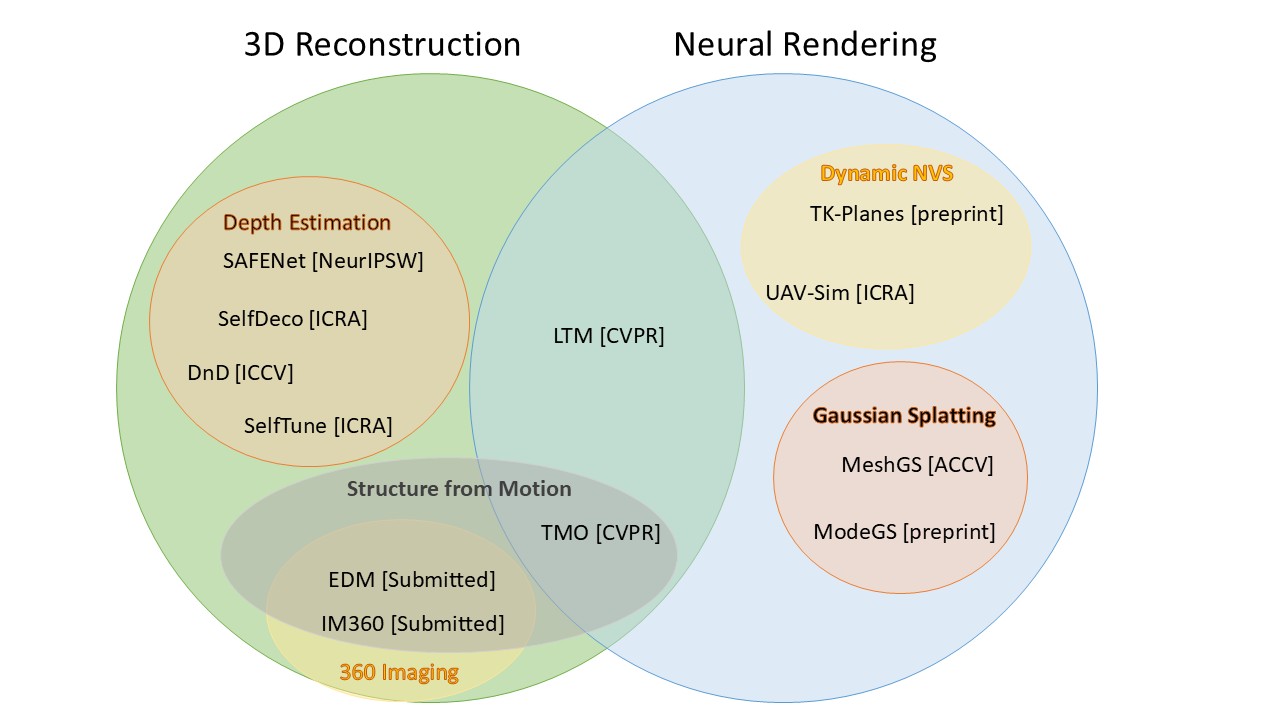

My research focuses on understanding and reconstructing the 3D world through two primary approaches: (1) generating precise 3D geometries and (2) producing photorealistic renderings of these environments.


(1) Depth Estimation and Completion: SelfDeco (ICRA'21), DnD (ICCV'21), SelfTune (ICRA'22)

(2) Neural Reconstruction and Rendering: TMO (CVPR'23), LTM (CVPR'24), MeshGS (ACCV'24), TK-Planes (IROS'25), UAV4D (AAAI'26)

(3) Neural Data Generation and Domain Adaptation: UAV-Twin (Preprint), UAV-Sim (ICRA'24), SEGDA (ICCV'19), STUDA (ICCV'19)

(4) 360 Imaging: EDM (CVPR'25), IM360 (ICCV'25), RPG360 (NeurIPS'25)

(5) 3D Foundation Model: MoRe (WACV'26)

Recent News

🌟 Selected for the Doctoral Consortium at ICCV 2025

Yonghan Lee, Tsung-Wei Huang, Shiv Gehlot, Jaehoon Choi, Guan-Ming Su, Dinesh Manocha
[arXiv]
We present a novel multi-video 4D Gaussian Splatting (4DGS) approach designed to handle real-world, unsynchronized video sets.

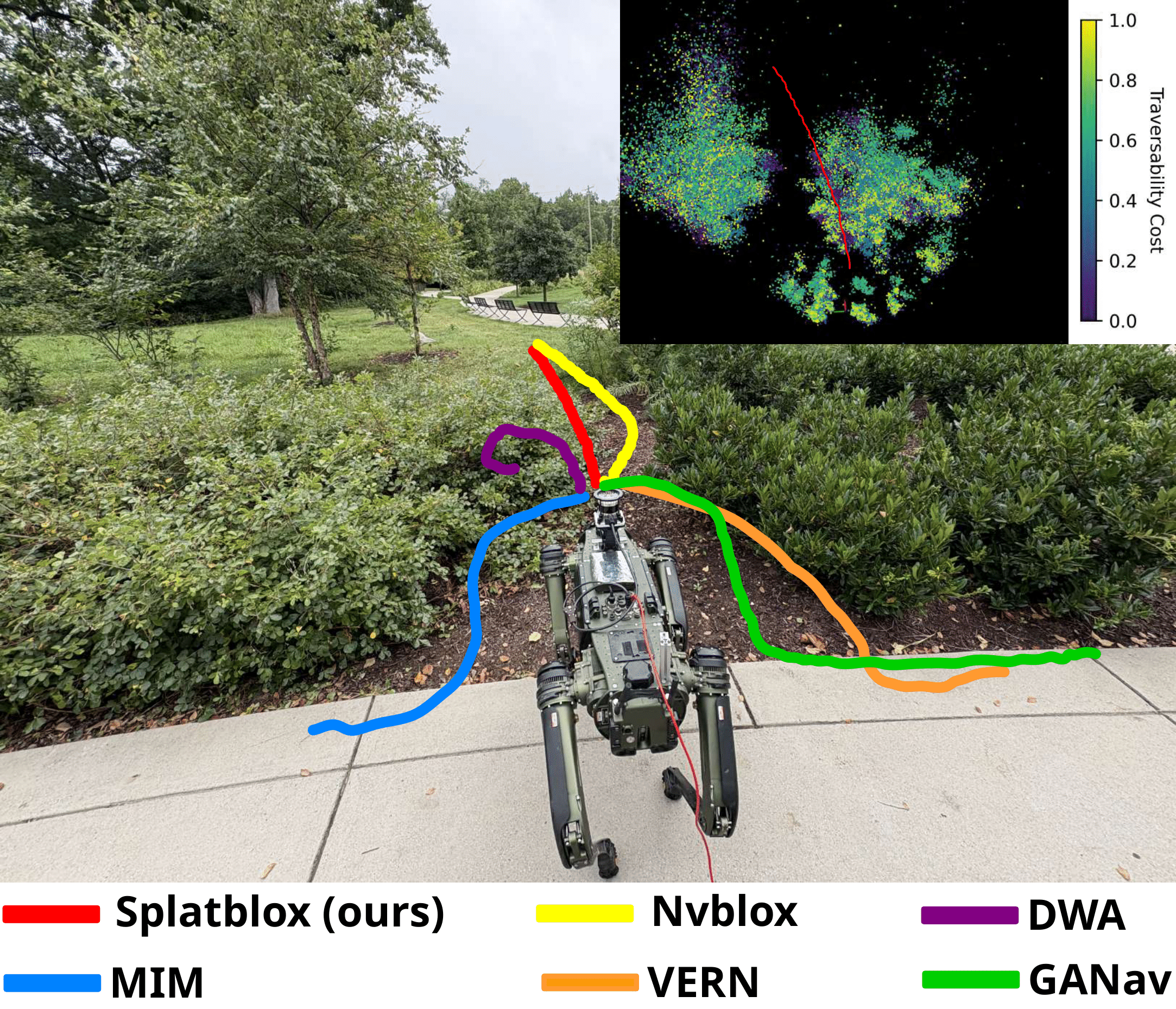

Samarth Chopra, Jing Liang, Gershom Seneviratne, Yonghan Lee, Jaehoon Choi, Jianyu An, Stephen Cheng, Dinesh Manocha
[arXiv] [Project]
We present Splatblox, a real-time system for autonomous navigation in outdoor environments with dense vegetation, irregular obstacles, and complex terrain.

Jaehoon Choi, Dongki Jung, Yonghan Lee, Sungmin Eum, Dinesh Manocha, and Heesung Kwon
[arXiv] [Project]
We present a method for creating digital twins from real-world environments and facilitating data augmentation for training downstream models embedded in unmanned aerial vehicles (UAVs).

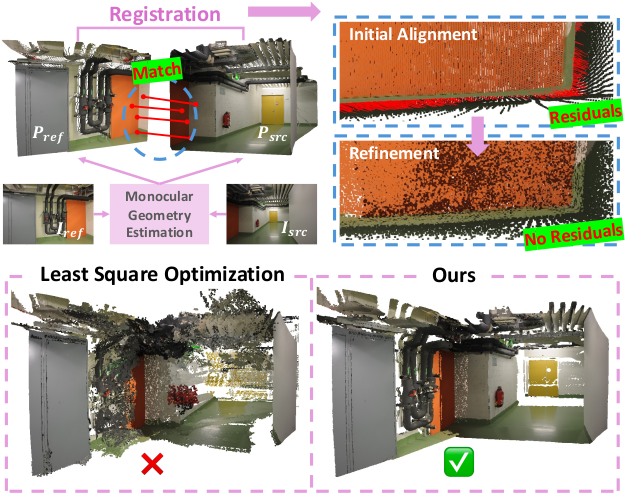

Dongki Jung, Jaehoon Choi, Yonghan Lee, Sungmin Eum, Heesung Kwon, and Dinesh Manocha
WACV, 2026 [arXiv]
We propose MoRe, a training-free Monocular Geometry Refinement method designed to improve cross-view consistency and achieve scale alignment.

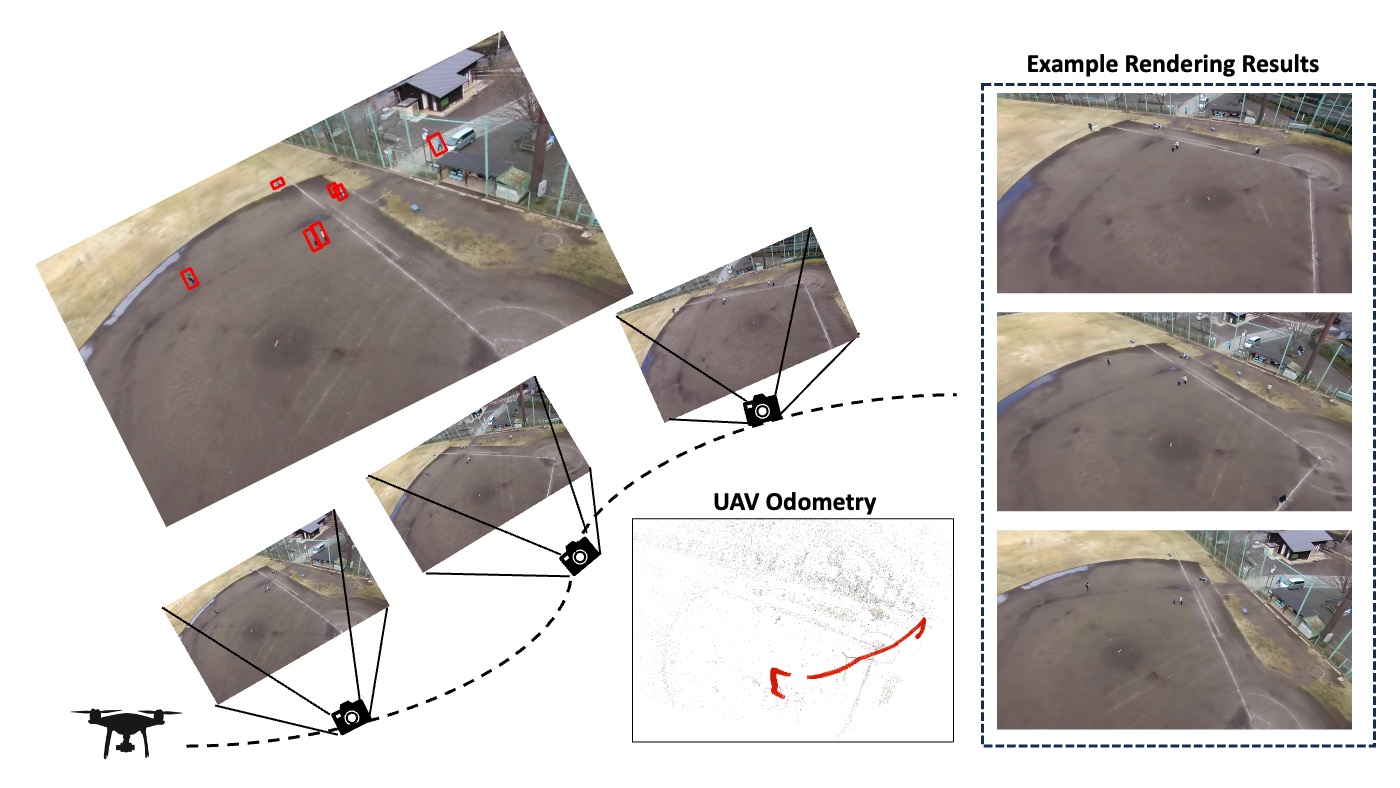

Jaehoon Choi, Dongki Jung, Christopher Maxey, Sungmin Eum, Yonghan Lee, Dinesh Manocha, and Heesung Kwon
AAAI, 2026 [arXiv] [Project]
We introduce UAV4D, a framework for photorealistic rendering of dynamic real-world scenes captured by UAVs.

Dongki Jung, Jaehoon Choi, Yonghan Lee, Dinesh Manocha
NeurIPS, 2025 [arXiv] [Project]
We propose a training-free method for 360-degree depth estimation by combining perspective foundation models with graph-based scale alignment.

Jaehoon Choi*, Dongki Jung*, Yonghan Lee, Dinesh Manocha (* These two authors contributed equally)
ICCV, 2025 [arXiv] [Project]
We propose a complete pipeline for indoor mapping using omnidirectional images, consisting of three key stages: (1) Spherical SfM, (2) Neural Surface Reconstruction, and (3) Texture Optimization.

Christopher Maxey, Jaehoon Choi, Yonghan Lee, Hyungtae Lee, Dinesh Manocha, and Heesung Kwon
IROS, 2025 [arXiv]
We propose an extension of the K-Planes Neural Radiance Field (NeRF) in which our algorithm stores a set of tiered feature vectors.

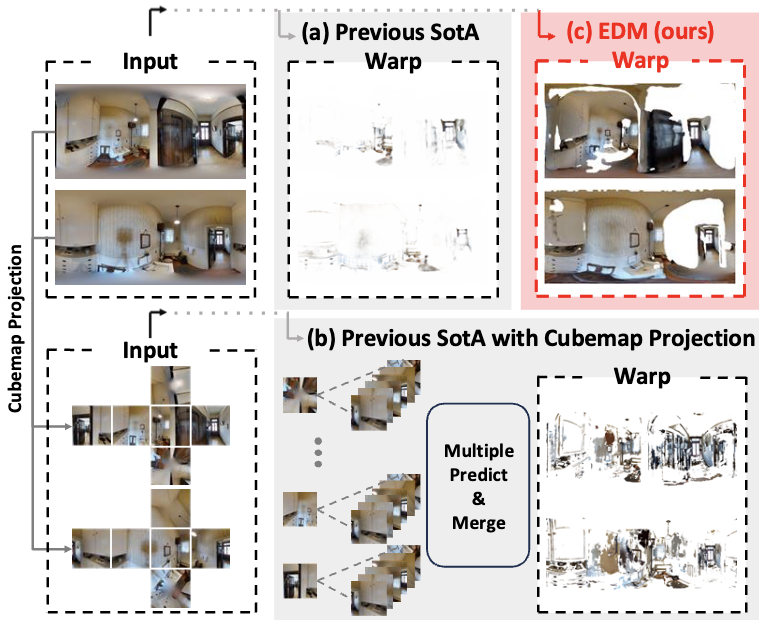

Dongki Jung, Jaehoon Choi, Yonghan Lee, Somi Jeong, Taejae Lee, Dinesh Manocha, Suyong Yeon
CVPR, 2025 [arXiv] [Project]
We propose the first learning-based dense matching algorithm for omnidirectional images.

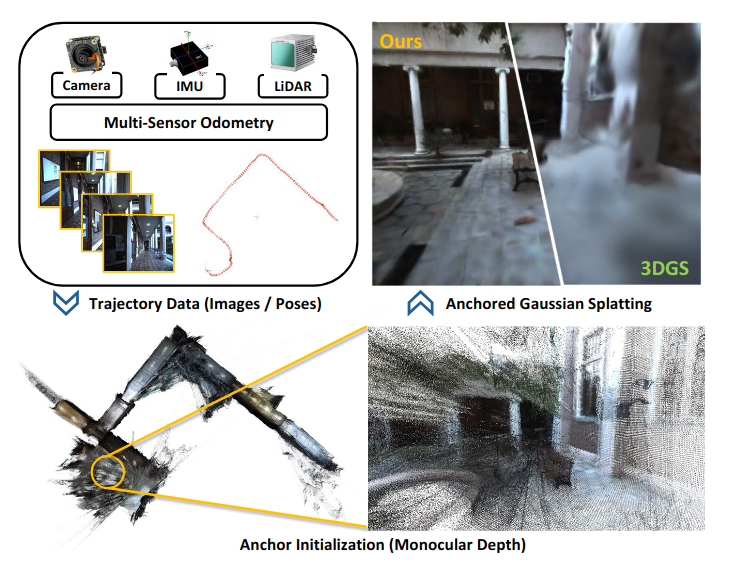

Yonghan Lee, Jaehoon Choi, Dongki Jung, Jaeseong Yun, Soohyun Ryu, Dinesh Manocha, and Suyong Yeon
Preprint [arXiv]
We propose a novel 3D Gaussian splatting algorithm that integrates a monocular depth network with anchored Gaussian splatting, enabling robust rendering performance on sparse-view datasets.

Jaehoon Choi, Yonghan Lee, Hyungtae Lee, Heesung Kwon, and Dinesh Manocha
ACCV, 2024 [arXiv]
We propose a novel approach that integrates mesh representation with 3D Gaussian splats to perform high-quality rendering of reconstructed real-world scenes.

Jaehoon Choi, Rajvi Shah, Qinbo Li, Yipeng Wang, Ayush Saraf, Changil Kim, Jia-Bin Huang, Dinesh Manocha, Suhib Alsisan, and Johannes Kopf
CVPR, 2024 [arXiv] [Project]
We present a practical method for reconstructing and optimizing textured meshes of large, unbounded real-world scenes that offer high visual and geometric fidelity.

Jaehoon Choi*, Christopher Maxey*, Hyungtae Lee, Dinesh Manocha, Heesung Kwon (* These two authors contributed equally)
ICRA, 2024 [arXiv] [Video]
We present a synthetic data generation pipeline based on NeRF for UAV-based perception.

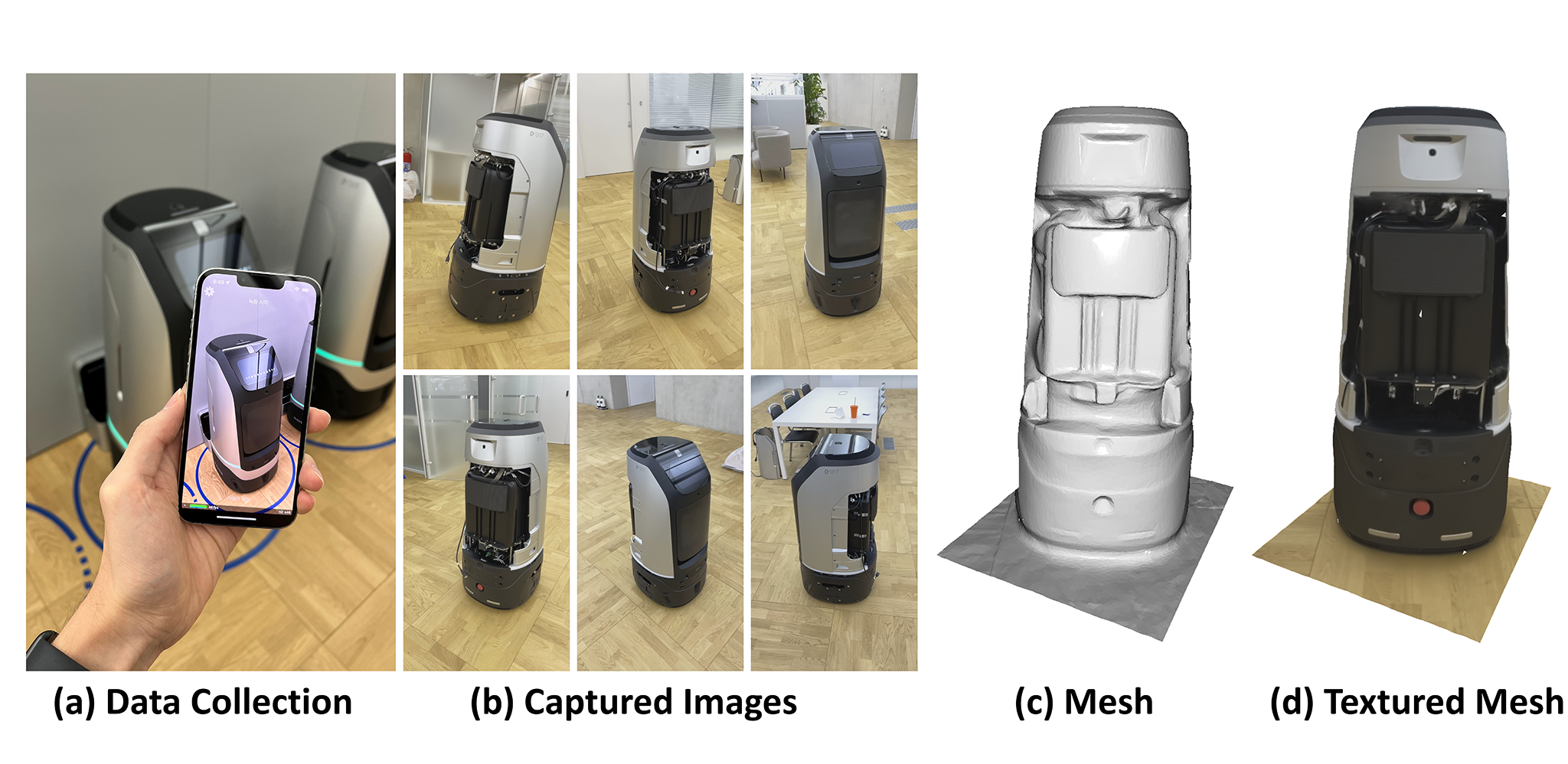

Jaehoon Choi, Dongki Jung, Taejae Lee, Sangwook Kim, Youngdong Jung, Dinesh Manocha, Donghwan Lee
CVPR, 2023 [arXiv] [Project]
We present a new pipeline for acquiring a textured mesh in the wild with a single smartphone.

Jaehoon Choi*, Dongki Jung*, Yonghan Lee, Deokhwa Kim, Dinesh Manocha, Donghwan Lee (* These two authors contributed equally)
ICRA, 2022 [arXiv]
We develop a self-supervised fine-tuning method for metrically accurate depth estimation.

Jaehoon Choi*, Dongki Jung*, Yonghan Lee, Deokhwa Kim, Changick Kim, Dinesh Manocha, Donghwan Lee (* These two authors contributed equally)
ICCV, 2021 [arXiv] [Supp.]
We present a novel approach for estimating depth from a monocular camera as it moves through complex and crowded indoor environments.

Taekyung Kim, Jaehoon Choi, Seokeon Choi, Dongki Jung, Changick Kim
ICCV, 2021 [arXiv]
We introduce a novel semi-supervised multi-view stereo framework.

Jaehoon Choi, Dongki Jung, Yonghan Lee, Deokhwa Kim, Dinesh Manocha, Donghwan Lee
ICRA, 2021 [arXiv]
We present a novel algorithm for self-supervised monocular depth completion in challenging indoor environments.

Jaehoon Choi*, Dongki Jung*, Donghwan Lee, Changick Kim (* These two authors contributed equally)
NeurIPS Workshop on Machine Learning for Autonomous Driving, 2020 [arXiv]
We propose SAFENet, which is designed to leverage semantic information to overcome the limitations of the photometric loss.

Dongki Jung, Seunghan Yang, Jaehoon Choi, Changick Kim
ICIP, 2020 [arXiv]
We present a novel learnable normalization technique for style transfer using graph convolutional networks.

Jaehoon Choi, Taekyung Kim, Changick Kim
ICCV, 2019 [arXiv]
We propose a novel framework consisting of two components: Target-Guided and Cycle-Free Data Augmentation and a Self-Ensembling algorithm.

Seunghyeon Kim, Jaehoon Choi, Taekyung Kim, Changick Kim
ICCV, 2019 (Oral) [arXiv]
We introduce a weak self-training (WST) method and adversarial background score regularization (BSR) for domain adaptive one-stage object detection.

Jaehoon Choi, Minki Jeong, Taekyung Kim, Changick Kim
BMVC, 2019 [arXiv]
We propose a pseudo-labeling curriculum based on a density-based clustering algorithm.

Service

Conference Reviewer: CVPR 2020, WACV 2021, ACCV 2020, AAAI 2021, ICRA 2021
Chosen as one of 66 outstanding reviewers of ACCV 2020

Teaching Assistant, CMSC216: Introduction to Computer Systems, Fall 2025

Teaching Assistant, CMSC733: Computer Processing of Pictorial Information, Fall 2022

Teaching Assistant, CMSC250: Discrete Structures, Fall 2021

Teaching Assistant, CMSC426: Computer Vision, Spring 2021

Teaching Assistant, EE838: Special Topics in Image Engineering, Optimization for Computer Vision, Spring 2019

Student Tutor for Foreign Students: Introduction to Programming, CS101, 2019